Ten university teams have been selected to participate in the live interactions phase of the Alexa Prize SimBot Challenge. As of February, those teams have advanced to the semifinals based on their performance during the initial customer feedback period. Alexa customers can interact with the teams' SimBots by saying "Alexa, play with robot" on Echo Show or Fire TV devices. Your ratings and feedback help the student teams improve their bots leading up to the competition finals.

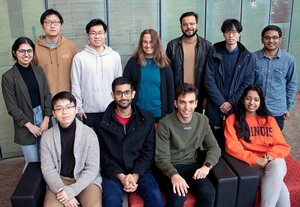

KingFisher, a team from the University of Illinois Urbana-Champaign, includes several graduate and undergraduate students and is advised by Illinois Computer Science professor Julia Hockenmaier.

"It is really exciting to see the enthusiasm and creativity of all ten university teams participating in this interactive embodied AI challenge," said Govind Thattai, Alexa AI principal applied scientist. "The teams have developed very impressive bots to solve the vision and language challenges for robotic task completion. We look forward to the tougher competition during this semifinals phase and how the teams will rise to the challenge."

The Alexa Prize is a unique industry-academia partnership program which provides an agile real-world experimentation framework for scientific discovery. University students have the opportunity to launch innovations online and rapidly adapt based on feedback from Alexa customers.

“The SimBot Challenge is focused on helping advance development of next-generation assistants that will assist humans in completing real-world tasks by harnessing generalizable AI methodologies such as continuous learning, teachable AI, multimodal understanding, and common-sense reasoning,” said Reza Ghanadan, a senior principal scientist in Alexa AI and head of Alexa Prize. “We have created a number of advanced software tools, as well as robust conversational and embodied AI models and data, to help lower the barriers to innovation for our university teams while accelerating research leveraging Alexa to invent and validate AI assistants capable of natural, reliable human-AI interactions.”

The 10 university teams selected to participate in the live interactions phase of the challenge are:

| Team name | University | Student team leader | Faculty advisor |
|---|---|---|---|
| Symbiote | Carnegie Mellon University | Nikolaos G. | Katerina Fragkiadaki |
| GauchoAI | University of California, Santa Barbara | Jiachen L. | Xifeng Yan |
| KingFisher | University of Illinois | Abhinav A. | Julia Hockenmaier |
| KnowledgeBot | Virginia Tech | Minqian L. | Lifu Huang |
| SalsaBot | Ohio State University | Chan Hee S. | Yu Su |
| SEAGULL | University of Michigan | Yichi Z. | Joyce Chai |
| SlugJARVIS | University of California, Santa Cruz | Jing G. | Xin Wang |
| ScottyBot | Carnegie Mellon University | Jonathan F. | Yonatan Bisk |
| EMMA | Heriot-Watt University | Amit P. | Alessandro Suglia |
| UMD-PRG | University of Maryland | David S. | Yiannis Aloimonos |

The SimBot Challenge comprises a public benchmark phase, which ran from January through April 2022, and the current live interactions phase (December 2022 to April 2023). Participants in both phases build machine-learning models for natural language understanding, human-robot interaction, and robotic task completion.

During the live interactions phase, university teams are competing to develop the bot that best responds to commands and multimodal sensor inputs from within a virtual world. As in previous Alexa Prize challenges, Alexa customers also participate in this phase.

In this case, customers interact with virtual robots powered by universities’ AI models on their Echo Show or Fire TV devices, seeking to solve progressively harder tasks within the virtual environment. After the interaction, they may provide feedback and ratings for the university bots. That feedback is shared with university teams to help advance their research.

TEACh dataset

In conjunction with the SimBot Challenge, Amazon publicly released TEACh, a new dataset of more than 3,000 human-to-human dialogues between a simulated user and simulated robot communicating with each other to complete household tasks.

In TEACh, the simulated user cannot interact with objects in the environment, and the simulated robot does not know the task to be completed, so the two must communicate and collaborate to complete each task. The public benchmark phase of the SimBot Challenge, which ended in June 2022, was based on the TEACh Execution from Dialog History (EDH) benchmark. EDH evaluates a model's ability to predict the robot's subsequent actions given the dialogue history between the user and the robot, along with the robot's past actions and observations.

About the KingFisher team

Neeloy C. — Team leader

Neeloy is a second-year PhD student in the Human-Centered Autonomy Lab at UIUC studying robotics and artificial intelligence. His research includes applying reinforcement learning (RL) to robot navigation in dense crowds, tackling sparse-reward RL problems, vehicle anomaly detection, and human-robot interaction tasks in vehicle cockpits. During his higher education, he has gained industry experience at companies such as Anheuser-Busch, Qualcomm, Brunswick, and Ford. He has also been a teaching assistant for the Introduction to Robotics class at UIUC, helping students learn the fundamentals of robotics in a laboratory setting. Neeloy is excited to apply what he has learned in other problem settings to the embodied AI task and to gain experience in computer vision and natural language processing.

Abhinav A.

Abhinav is a first-year graduate student in Statistics (Analytics concentration) at UIUC with five years of experience as a data scientist at Verizon. He completed his undergraduate degree in Computer Science & Engineering (AI concentration) at Lovely Professional University, India, in 2016. In addition to his data science work, he has worked as a DevOps administrator, applications developer, and real-time streaming data engineer. In data science, he has worked on predictive modeling, NLP, anomaly detection, sequence mining, explainable AI (XAI), and computer vision (CV). He was a Microsoft Student Ambassador in 2014-2015 and an AI6 city ambassador for Hyderabad, India, in 2018.

Blerim A.

Blerim is a third-year undergraduate studying computer engineering at UIUC and a recent transfer from the College of DuPage. His research interests include computer vision and perception with applications in robotics. His main areas of expertise are embedded systems and electronics, with applications in robotics and IoT. He worked as an embedded security intern at Pacific Northwest National Laboratory, creating machine learning models for network security in IoT devices. He also led the development of a mining robot for the NASA Lunabotics Competition, built with ROS, RealSense cameras, and various other electronics.

Peixin C.

Peixin is an Electrical and Computer Engineering PhD student at UIUC in the area of robotics and artificial intelligence. His research interests are embodied language understanding, robotics, and reinforcement learning. His work involves developing embodied, vision-based spoken language understanding agents for robotic systems using reinforcement learning. He also has experience in learning-based robotic navigation in both static and dynamic environments. He is familiar with robotic simulation and has designed and created multiple OpenAI Gym environments based on PyBullet and AI2-THOR. He is also familiar with deep learning packages such as TensorFlow and PyTorch.

Haomiao C.

Haomiao is an undergraduate student at UIUC studying statistics, computer science, and physics. He is interested in machine learning, robotics, NLP, and computer vision. Haomiao has worked on computer vision projects focused on 3D structure reconstruction and has experience implementing, training, and optimizing a variety of machine learning models. He also has some NLP experience, having applied semi-supervised learning to language classification. Haomiao is interested in all kinds of NLP models and applications and is eager to learn and explore more through the project.

Runxiang (Sam) C.

Sam is a third-year Computer Science PhD student at UIUC. He works on machine learning, currently focusing on multimodal learning. Previously, Sam researched the reliability of distributed systems, specifically the prevention of misconfiguration-related failures. Sam obtained a Bachelor of Science in Computer Science from UC Davis in 2019, where he worked on multimodal machine translation, conversational AI, and software data analysis.

Jongwon P.

Jongwon is an undergraduate student at UIUC majoring in Computer Science. Jongwon's interests lie at the intersection of NLU and multimodal learning, envisioning weakly supervised models that emulate the functions of the brain. He is passionate about the attention mechanism employed by transformers and its applications beyond language tokens. Jongwon's prior experience includes creating a BERT model for text summarization that simulates the brain's day-and-night continual learning process. Outside of ML research, Jongwon develops full-stack websites and deploys ML strategies for quantitative trading in the cryptocurrency space.

Devika P.

Devika is a third-year undergraduate at UIUC majoring in Computer Science. She previously interned at Motorola Solutions as a software engineering intern on the predictive analytics team, developing machine learning algorithms for mission-critical radio networks. Devika has also interned at Apple on the Siri Product team within its ML organization, using data analytics and ML models to optimize resource use for the best performance. Her past research interests include packet scheduling in high-criticality networks and theoretical topology.

Nikil R.

Nikil is an undergraduate studying Mathematics and Computer Science at UIUC. His research interests are primarily in natural language processing and computer vision. He has worked on multiple NLP subareas, such as topic modeling, semantic similarity, keyphrase extraction and generation, and text embeddings. He also has experience training, testing, optimizing, and deploying deep learning models (for computer vision and time-series forecasting) using high-performance computing, with applications in diverse domains including astrophysics, spectroscopy, and cancer research. In addition, he has some familiarity with AWS, having used it in projects involving NLP and machine learning.

Risham S.

Risham is a Computer Science PhD student at UIUC in the area of artificial intelligence. Her current interests are grounding and multimodal networks, and she is working on a similar goal-oriented dialogue task, developing a Commander model for the Minecraft Dialogue Corpus. She also has experience with a range of NLP projects, including evaluating the faithfulness of grounded representations and their training dynamics in VQA, information extraction from scientific papers, annotating, creating, and updating datasets, and text classification and generation.

Kulbir S.

Kulbir is currently pursuing a PhD at UIUC, where he works on integrating the Alexa API with agricultural robots to enable remote voice control of CNC-based gardening robots. Kulbir's interest in robotics was fostered during his undergraduate studies in electrical engineering. During his master's in robotics at the University of Maryland, he built a solid theoretical foundation in computer science and robotics by taking core robotics courses focusing on planning and perception for autonomous systems, ROS, and decision making.

Julia Hockenmaier — Faculty advisor

Julia Hockenmaier is a full professor in the Department of Computer Science at the University of Illinois at Urbana-Champaign. Her main area of research is computational linguistics or natural language processing.

Read the original article from Amazon Science.